NVIDIA announced KAI Scheduler, the open-source version of the NVIDIA Run:ai Scheduler, available as a new open-source project under the Apache 2.0 license. The NVIDIA Run:ai Scheduler is an AI workload and GPU orchestration tool designed to manage and optimize compute resources across Kubernetes clusters. KAI Scheduler is derived from the NVIDIA Run:ai platform and will remain an integral piece of it.

NVIDIA is committed to keeping the open-source project and the platform scheduler aligned, with no forks or divergence, and aims to foster an active and collaborative community where contributions, feedback, and new ideas are encouraged. By working with the open-source community, we believe this project can evolve to meet the needs of various use cases, enabling further capabilities and continued innovation.

In this post, we provide an overview of KAI Scheduler’s technical details, highlight its value for IT and ML teams, and explain the scheduling cycle and actions.

Benefits of KAI Scheduler

Managing AI workloads on GPUs and CPUs presents a number of challenges that traditional resource schedulers often fail to meet. The scheduler was developed to specifically address these issues:

- Managing fluctuating GPU demands

- Reduced wait times for compute access

- Resource guarantees or GPU allocation

- Seamlessly connecting AI tools and frameworks

Managing fluctuating GPU demands

AI workloads can change rapidly. For instance, you might need only one GPU for interactive work (for example, for data exploration) and then suddenly require several GPUs for distributed training or multiple experiments. Traditional schedulers struggle with such variability.

The KAI Scheduler continuously recalculates fair-share values and adjusts quotas and limits in real time, automatically matching the current workload demands. This dynamic approach helps ensure efficient GPU allocation without constant manual intervention from administrators.

Reduced wait times for compute access

For ML engineers, time is of the essence. The scheduler reduces wait times by combining gang scheduling, GPU sharing, and a hierarchical queuing system. You can submit batches of jobs and then step away, confident that tasks will launch as soon as resources are available, in line with priorities and fairness.

To further optimize resource usage, even in the face of fluctuating demand, the scheduler employs two effective strategies for both GPU and CPU workloads:

- Bin-packing and consolidation: Maximizes compute utilization by combating resource fragmentation—packing smaller tasks into partially used GPUs and CPUs—and addressing node fragmentation by reallocating tasks across nodes.

- Spreading: Evenly distributes workloads across nodes or GPUs and CPUs to minimize the per-node load and maximize resource availability per workload.
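The difference between the two strategies can be illustrated with a minimal sketch. The function name `pick_node`, the strategy labels, and the free-GPU counts are hypothetical and chosen for illustration; this is not KAI Scheduler's actual node-scoring logic, which is more involved.

```python
from typing import Dict, Optional


def pick_node(free_gpus: Dict[str, int], request: int, strategy: str) -> Optional[str]:
    """Pick a node for a GPU request, or return None if no node fits it."""
    # Only nodes with enough free GPUs are candidates.
    candidates = {node: free for node, free in free_gpus.items() if free >= request}
    if not candidates:
        return None
    if strategy == "binpack":
        # Fill the fullest node that still fits, keeping emptier nodes whole.
        return min(candidates, key=lambda node: (candidates[node], node))
    if strategy == "spread":
        # Use the emptiest node to keep the per-node load low.
        return min(candidates, key=lambda node: (-candidates[node], node))
    raise ValueError(f"unknown strategy: {strategy}")


free = {"node-1": 2, "node-2": 5, "node-3": 8}
print(pick_node(free, 2, "binpack"))  # node-1
print(pick_node(free, 2, "spread"))   # node-3
```

With bin-packing, the two-GPU request lands on the node that is already mostly used, which leaves the fully free node available for a larger job. With spreading, the same request goes to the emptiest node.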

Resource guarantees or GPU allocation

In shared clusters, some researchers secure more GPUs than necessary early in the day to ensure availability throughout. This practice can lead to underutilized resources, even when other teams still have unused quotas.

KAI Scheduler addresses this by enforcing resource guarantees. It ensures that AI practitioner teams receive their allocated GPUs, while also dynamically reallocating idle resources to other workloads. This approach prevents resource hogging and promotes overall cluster efficiency.

Seamlessly connecting AI tools and frameworks

Connecting AI workloads with various AI frameworks can be daunting. Traditionally, teams face a maze of manual configurations to tie together workloads with tools like Kubeflow, Ray, Argo, and the Training Operator. This complexity delays prototyping.

KAI Scheduler addresses this by featuring a built-in podgrouper that automatically detects and connects with these tools and frameworks—reducing configuration complexity and accelerating development.

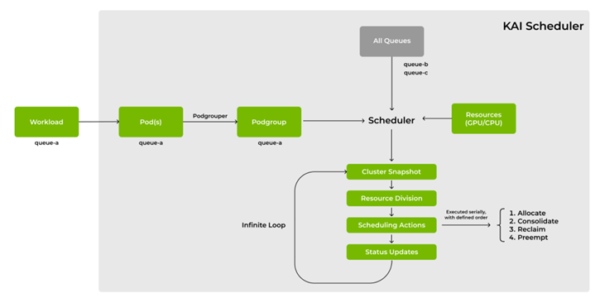

Alt text: The scheduling process diagram shows a workload assigned to a queue, which generates pods grouped into a podgroup. The podgroup is sent to the scheduler, which considers workloads from multiple queues. The scheduler operates in an infinite loop, performing a series of steps: taking a cluster snapshot (GPUs and CPUs), dividing resources, executing scheduling actions (allocation, consolidation, reclamation, and preemption), and updating the cluster status. The system ensures efficient resource allocation and management within defined execution orders.

Core scheduling entities

There are two main entities for KAI Scheduler: podgroups and queues.

Podgroups

Podgroups are the atomic units for scheduling. A podgroup represents one or more interdependent pods that must be scheduled and run as a single unit, an approach known as gang scheduling. This concept is vital for distributed AI training with frameworks like TensorFlow or PyTorch.

Here are the key podgroup attributes:

- Minimum members: Specifies the minimum number of pods that must be scheduled together. If the required resources are unavailable for all members, the podgroup remains pending.

- Queue association: Each podgroup is tied to a specific scheduling queue, linking it to the broader resource allocation strategy.

- Priority class: Determines the scheduling order relative to other podgroups, influencing job prioritization.
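As a rough illustration of these attributes and of the all-or-nothing rule behind minimum members, here is a minimal sketch. The `PodGroup` class, its field names, and the simplification that every pod requests the same number of GPUs are assumptions made for this example, not KAI Scheduler's actual resource definition.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PodGroup:
    """Hypothetical, simplified view of a podgroup."""
    name: str
    queue: str          # queue association
    priority: int       # priority class, higher runs first
    min_members: int    # pods that must be scheduled together
    gpus_per_pod: int   # assumption: all pods request the same GPU count


def gang_fits(group: PodGroup, free_gpus_per_node: List[int]) -> bool:
    """Return True only if min_members pods can all be placed at once."""
    # Each node can host as many pods as its free GPUs allow.
    slots = sum(free // group.gpus_per_pod for free in free_gpus_per_node)
    return slots >= group.min_members


job = PodGroup("dist-train", queue="queue-a", priority=50, min_members=4, gpus_per_pod=2)
print(gang_fits(job, [4, 2, 1]))  # False: only three pods fit, so the group stays pending
print(gang_fits(job, [4, 4]))     # True: all four pods fit
```

The point of the check is that a partial placement is never accepted: three of four pods fitting is treated the same as none fitting.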

Queues

Queues serve as the foundational entities for enforcing resource fairness. Each queue has specific properties that guide its resource allocation:

- Quota: The baseline resource allocation guaranteed to the queue.

- Over-quota weight: Influences how additional, surplus resources are distributed beyond the baseline quota amongst all queues.

- Limit: Defines the maximum resources that the queue can consume.

- Queue priority: Determines the scheduling order relative to other queues, influencing queue prioritization.

Architecture and scheduling cycle

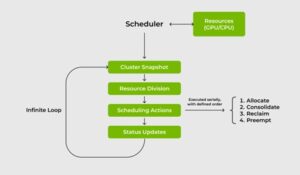

At its core, the scheduler operates by continuously capturing the state of the Kubernetes cluster, computing optimal resource distribution, and applying targeted scheduling actions.

Alt text: The diagram shows the internal workflow of the KAI scheduler responsible for managing GPU and CPU resources. The scheduler operates in an infinite loop, continuously executing four key processes: taking a cluster snapshot, dividing available resources, performing scheduling actions, and updating the cluster status. Scheduling actions are executed in a defined order: allocation, consolidation, reclamation, and preemption.

The process is organized into the following phases:

- Cluster snapshot

- Resource division and fair-share computation

- Scheduling actions

Cluster snapshot

The scheduling cycle starts with capturing a complete snapshot of the Kubernetes cluster. During this phase, the current state of nodes, podgroups, and queues is recorded.

Kubernetes objects are transformed into internal data structures, preventing mid-cycle inconsistencies and ensuring that all scheduling decisions are based on a stable view of the cluster.
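The idea of deciding against a stable view can be sketched as follows. The `take_snapshot` helper and the dictionary layout are hypothetical; the real scheduler builds its own internal structures from Kubernetes objects.

```python
import copy
from types import MappingProxyType
from typing import Any, Dict, Mapping


def take_snapshot(live_state: Dict[str, Any]) -> Mapping[str, Any]:
    """Copy the live state once so later changes cannot leak into this cycle."""
    # deepcopy detaches the snapshot from the live objects;
    # MappingProxyType makes the top-level mapping read-only.
    return MappingProxyType(copy.deepcopy(live_state))


live = {"node-1": {"free_gpus": 2}, "node-2": {"free_gpus": 2}}
snapshot = take_snapshot(live)

# The cluster changes while the cycle is still running.
live["node-1"]["free_gpus"] = 0

print(snapshot["node-1"]["free_gpus"])  # 2: the cycle still sees the captured state
```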

Resource division and fair-share computation

With an accurate snapshot in hand, a fair share is calculated for each scheduling queue by the resource division algorithm. The result of this algorithm is a numerical value that represents the optimal amount of resources that a queue should get to maximize fairness among all queues in the cluster.

The division algorithm has the following phases:

- Deserved-quota: Each scheduling queue is initially allocated resources equal to its baseline quota. This guarantees that every department, project, or team gets the minimum resources to which it is entitled.

- Over-quota: Any remaining resources are distributed among queues that are unsatisfied, in proportion to their over-quota weight. This iterative process fine-tunes the fair-share for each queue, dynamically adapting to workload demands.
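The two phases can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the scheduler's actual algorithm: the `Queue` class and its `demand` field are hypothetical, a queue counts as unsatisfied while its share is below its demand (or its limit, if lower), and a single resource type (GPUs) is considered.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

EPS = 1e-9


@dataclass
class Queue:
    name: str
    quota: float
    over_quota_weight: float
    demand: float                  # hypothetical: total GPUs the queue is asking for
    limit: Optional[float] = None  # maximum the queue may consume, if set


def fair_shares(queues: List[Queue], total: float) -> Dict[str, float]:
    def cap(q: Queue) -> float:
        return q.demand if q.limit is None else min(q.demand, q.limit)

    # Phase 1 (deserved quota): each queue gets its quota, up to what it can use.
    share = {q.name: min(q.quota, cap(q)) for q in queues}
    remaining = total - sum(share.values())

    # Phase 2 (over-quota): split the surplus by weight among unsatisfied queues,
    # repeating because a queue that hits its cap returns its unused portion.
    while remaining > EPS:
        unsatisfied = [q for q in queues
                       if q.over_quota_weight > 0 and cap(q) - share[q.name] > EPS]
        if not unsatisfied:
            break
        total_weight = sum(q.over_quota_weight for q in unsatisfied)
        handed_out = 0.0
        for q in unsatisfied:
            offer = remaining * q.over_quota_weight / total_weight
            grant = min(offer, cap(q) - share[q.name])
            share[q.name] += grant
            handed_out += grant
        remaining -= handed_out
    return share


queues = [
    Queue("queue-a", quota=8.0, over_quota_weight=1.0, demand=20.0),
    Queue("queue-b", quota=8.0, over_quota_weight=3.0, demand=12.0),
]
shares = fair_shares(queues, total=24.0)
```

In this example, both queues first receive their quota of 8 GPUs, leaving 8 to distribute. The first over-quota round offers 2 to queue-a and 6 to queue-b, but queue-b needs only 4 more, so 2 GPUs return to the pool and go to queue-a in the next round. Both queues end with a fair share of 12 GPUs.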

Scheduling actions

With fair shares computed, the scheduler applies a series of targeted actions to align current allocations with the computed optimal state:

- Allocate: Pending jobs are assessed based on their allocated-to-fair-share ratio. Jobs that fit available resources are immediately bound, while those requiring resources currently being freed are queued for pipelined allocation.

- Consolidation: For training workloads, the scheduler builds an ordered queue of the remaining pending training jobs. It iterates over them and tries to allocate them by moving currently allocated pods to a different node. This process minimizes resource fragmentation, freeing contiguous blocks for pending jobs.

- Reclaim: To enforce fairness, the scheduler identifies queues consuming more than their fair share. It then evicts selected jobs based on well-defined strategies—ensuring that under-served queues receive the necessary resources.

- Preempt: Within the same queue, lower-priority jobs can be preempted in favor of high-priority pending jobs, ensuring that critical workloads are not starved due to resource contention.
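To make the allocated-to-fair-share ratio concrete, the following sketch orders queues for the allocate action. It assumes that the queue furthest below its fair share is served first, and the `allocation_order` function and the sample numbers are hypothetical. The real ordering also accounts for priorities and other factors.

```python
from typing import Dict, List


def allocation_order(allocated: Dict[str, float], fair_share: Dict[str, float]) -> List[str]:
    """Order queues so the one furthest below its fair share comes first."""
    def ratio(name: str) -> float:
        share = fair_share.get(name, 0.0)
        # A queue with no fair share is treated as fully served and goes last.
        return float("inf") if share <= 0 else allocated[name] / share

    # Ties are broken by name to keep the order deterministic.
    return sorted(allocated, key=lambda name: (ratio(name), name))


allocated = {"queue-a": 13.0, "queue-b": 4.0}
fair_share = {"queue-a": 12.0, "queue-b": 12.0}
print(allocation_order(allocated, fair_share))  # ['queue-b', 'queue-a']
```

Here queue-a is above its fair share (ratio greater than 1), which would also make it a candidate for the reclaim action if queue-b's pending jobs could not otherwise be placed.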

Example scenario

Imagine a cluster with three nodes, each equipped with eight GPUs, for 24 GPUs in total. There are two projects going on: project-a and project-b. They are associated with queue-a (medium priority queue) and queue-b (high priority queue), respectively. Assume that all queues are within their fair share. Figure 3 shows the current state.

Alt text: A diagram shows a cluster with three nodes, each containing eight GPUs. Jobs are assigned from two priority queues: a high-priority queue and a medium-priority queue. Node 1 runs Training Jobs 1 and 2, with the high-priority job using four GPUs and the medium-priority job using two GPUs. Node 2 is occupied by Training Job 3, a medium-priority job using six out of eight GPUs. Node 3 hosts an interactive job from the medium-priority queue, using five out of eight GPUs.

- Node 1: Training Job 1, from the high-priority queue, uses four GPUs and Training Job 2 uses two GPUs.

- Node 2: Training Job 3 uses six GPUs.

- Node 3: An interactive job, which can range from data exploration to script debugging, uses five GPUs.

Now two pending jobs are submitted to the queues (Figure 4):

- Training Job A, submitted to the medium-priority queue, requires four contiguous GPUs on a single node.

- Training Job B, submitted to the high-priority queue, requires three contiguous GPUs.

Alt text: A diagram shows that two training jobs, Training Job A and Training Job B, are submitted with GPU requests of four and three, respectively. Training Job A belongs to the medium-priority queue (queue-a), while Training Job B is associated with the high-priority queue (queue-b).

Allocation phase

Alt text: After capturing the cluster snapshot, the scheduler begins processing the submitted jobs. It first attempts the allocation action, prioritizing the highest-priority queue. As a result, Training Job B is scheduled on Node 3.

The scheduler orders the pending jobs by priority. In this case, Training Job B is handled first because its queue has the higher priority. The actual job ordering is more sophisticated than a simple priority sort: the scheduler also weighs fairness and efficiency when deciding which job to consider next.

- For Training Job B, Node 3 qualifies as it has three contiguous free GPUs. The scheduler binds Training Job B to Node 3, and Node 3 becomes fully occupied.

- Next, the scheduler attempts to allocate Training Job A. However, none of the nodes provide four contiguous free GPUs.

Consolidation phase

Alt text: To accommodate Training Job A, the scheduler attempts to allocate four GPUs. However, no node currently has four available GPUs. As a result, the scheduler enters the consolidation phase, relocating Training Job 2 from Node 1 to Node 2. This frees up space on Node 1, enabling Training Job A to be scheduled there.

Because Training Job A could not be scheduled with the allocation action, the scheduler enters the consolidation phase. It inspects nodes to see if it can reallocate running pods to form a contiguous block for Training Job A.

- On Node 1, aside from Training Job 1 (which must remain due to its high priority), there’s Training Job 2 occupying two GPUs. The scheduler relocates Training Job 2 from Node 1 to Node 2, which has two free GPUs.

- After this consolidation move, Node 1’s free GPU count increases from two to four contiguous GPUs.

- With this new layout, Training Job A can now be allocated to Node 1, satisfying its requirement of four contiguous GPUs.

In this scenario, the consolidation action was enough that neither reclaim nor preempt had to be invoked. However, if there were an additional pending job from a queue below its fair share with no consolidation path available, the scheduler’s reclaim or preempt actions would then kick in—reclaiming resources from over-allocated queues or from lower-priority jobs in the same queue to restore balance.
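The allocation and consolidation steps of this example can be replayed with a small simulation. It is a sketch under simplifying assumptions: the function names and the `movable` set are hypothetical, GPU contiguity is reduced to a per-node free count, and a job is moved only if a single relocation makes room.

```python
from typing import Dict, Optional, Set

CAPACITY = 8  # GPUs per node, as in the example

Cluster = Dict[str, Dict[str, int]]  # node -> {job: GPUs in use}


def free(cluster: Cluster, node: str) -> int:
    return CAPACITY - sum(cluster[node].values())


def allocate(cluster: Cluster, job: str, gpus: int) -> Optional[str]:
    """Bind the job to the first node with enough free GPUs."""
    for node in sorted(cluster):
        if free(cluster, node) >= gpus:
            cluster[node][job] = gpus
            return node
    return None


def consolidate(cluster: Cluster, job: str, gpus: int, movable: Set[str]) -> Optional[str]:
    """Move one running job to another node if that makes room for the pending job."""
    for source in sorted(cluster):
        for victim, used in list(cluster[source].items()):
            if victim not in movable or free(cluster, source) + used < gpus:
                continue
            for target in sorted(cluster):
                if target != source and free(cluster, target) >= used:
                    del cluster[source][victim]
                    cluster[target][victim] = used
                    cluster[source][job] = gpus
                    return source
    return None


cluster: Cluster = {
    "node-1": {"training-1": 4, "training-2": 2},
    "node-2": {"training-3": 6},
    "node-3": {"interactive": 5},
}

node_b = allocate(cluster, "training-b", 3)        # high-priority queue goes first
node_a_first = allocate(cluster, "training-a", 4)  # no node has four free GPUs
node_a = consolidate(cluster, "training-a", 4, movable={"training-2"})

print(node_b, node_a_first, node_a)  # node-3 None node-1
```

After the run, Training Job 2 sits on Node 2 next to Training Job 3, and all three nodes are fully occupied.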

Status updates

The status of the queues is updated and the whole cycle starts again from the beginning, so that the scheduler can keep up with new workloads.

Community collaboration

KAI Scheduler isn’t just a prototype. It’s the robust engine at the heart of the NVIDIA Run:ai platform, trusted by many enterprises and powering critical AI operations. With its proven track record, the KAI Scheduler sets the gold standard for AI workload orchestration.

We invite enterprises, startups, research labs, and open-source communities to experiment with the scheduler in their own settings and contribute what they learn. At KubeCon 2025, join us on the /NVIDIA/KAI-scheduler GitHub repo, give the project a star, install it, and share your real-world experiences. Your feedback is invaluable to us as we continue to push the boundaries of what AI infrastructure can do.

NVIDIA is excited to participate in KubeCon April 1–4 in London, UK. Come engage us at Booth S750 for live conversations and demonstrations. For more information about what we are working on, including our 15+ speaking sessions, see NVIDIA at KubeCon.

Figure 1. KAI Scheduler workflow