Software-defined power architectures can deliver significant gains in efficiency and uptime for data centers and network infrastructure. Early adopters have an opportunity to gain first-mover advantage.

Introduction: Infrastructure Under Pressure

Modern lifestyles and work patterns are increasingly dependent on storing, accessing, processing and sharing data. With our smart digital devices, we can create content at will, and publish instantaneously via the Internet. Modern businesses, of course, are huge users of online data, as are government agencies responsible for maintaining security and improving delivery of public services.

According to figures from Cisco, annual data center traffic is set to exceed 8.6 zettabytes by 2018, and mobile data traffic is growing even faster than fixed IP traffic. The move to 5G will accelerate this trend.

Our dependence on data is set to increase further, as the Internet of Things becomes the Internet of Everything, and further aspects of life – including our mobility – become increasingly electronically managed. Autonomous cars will bring unprecedented demand for collecting, analyzing, sharing and storing data, especially as numerous vehicles and highway sensors will monitor the same events from different perspectives. We can contemplate a future containing trillions of sensors, collecting and passing data continuously. Moreover, impatient consumers and real-time services such as smart driving will demand immediate responses, ratcheting up the pressure on the infrastructure even further.

Figure 1. The Internet of Everything is permeating nearly every type of application, leading to a future with trillions of sensors, all collecting, sharing and analyzing data.

Many companies are striving to keep up with relentlessly growing demand for data handling, processing and storage while also controlling operating costs. Compounding the challenge, our growing reliance on data services in all aspects of life means that outages have increasingly serious consequences. The networks and data centers that underpin modern life around the world must deliver the highest reliability and maximum uptime.

It is also worth mentioning that not all of the vast numbers of sensors anticipated by forecasters will be used to enhance already comfortable lifestyles: industry-backed events such as the TSensors Summit have envisioned many trillions of networked sensors being used to help combat shortages of food, energy, water, healthcare and education in the world’s most deprived areas.

Demand for Power

Keeping computers supplied with energy is the most significant operating cost for today’s data-service providers. Data center operators know that powering a server over its typical three-year lifetime costs more than purchasing the hardware outright. In addition, cooling measures such as air conditioning, needed to maintain recommended operating temperatures, account for a significant portion of operating costs. Operators are so keen to address these costs that they are deliberately setting up operations in colder climates such as Scandinavia and northwestern North America, close to sources of low-cost, reliable energy such as hydroelectric plants.

The always-connected nature of modern life makes the demands placed on the data infrastructure extremely dynamic. For example, social media can react instantly to world events as they unfold, such as natural disasters, political crises or even major sporting events, causing significant surges in traffic volumes. The infrastructure needs to operate at optimum efficiency under all conditions and handle rapid transitions from minimum to maximum activity without compromising service availability. Adaptive power management is essential to meet the world’s data needs going forward.

The Move to Software-Defined Power

Data center architects have successfully used virtualization to increase server utilization, helping to reduce both capital costs and the cost of powering idle servers. With virtualization, the computing architecture has become software defined. Power supply design has advanced at the same time.

Many operators of data centers and network infrastructure are in the process of moving from relatively inflexible analog technology to digital power, which is more readily adapted to ensure optimum efficiency. However, there is still a tendency to treat digital converters in much the same way as their analog predecessors. This risks exploiting just a fraction of the potential these converters offer to boost efficiency and cut operating costs. The next logical step is for the power architecture to become software defined, taking advantage of the adaptability of digital power and introducing software control to manage the power supply continuously as operating conditions change.

A software-defined power architecture has potential benefits for power supply designers and developers as well as for data center operators. The ability to adapt designs at the software level promises to reduce hardware-design risk and allow faster project completion. Fortunately, the move from analog power architectures to software-defined power delivery can be implemented quickly and easily, without the need to develop new skills or design IP.

In practice, software-defined power architectures will be able to overcome many of the challenges facing data center designers and operators today. Digital power architectures already allow fine-tuning of intermediate bus and point-of-load (POL) output voltages to optimize operating efficiency, taking into account the small differences between individual boards caused by semiconductor process variations. The effects of temperature changes can also be compensated. Handing this type of adjustment over to software control can deliver a valuable improvement in overall efficiency. This, however, is only one of the improvements that are possible. A software-defined power architecture can realize further significant efficiency gains and also improve other performance metrics such as reliability and uptime, for example by reducing the stress on power supplies during periods of low demand and by enabling predictive maintenance. Moreover, it can adapt on the fly to meet demand by activating or de-activating power supplies, and can adjust system voltages for optimum efficiency.
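To make the idea of activating or de-activating supplies concrete, here is a minimal sketch of one possible shedding policy for a shelf of identical, load-sharing supplies. It keeps just enough units on that each carries no more than a target fraction of its rating (many supplies are most efficient somewhere in the middle of their load range), plus one spare for redundancy. The function name, the 3 kW rating, the 50% target and the single spare are all hypothetical assumptions for illustration, not a vendor algorithm.

```python
import math


def supplies_to_enable(load_w: float, rating_w: float, installed: int,
                       target_fraction: float = 0.5, spares: int = 1) -> int:
    """Return how many identical, load-sharing supplies to keep switched on.

    Hypothetical policy: enough supplies that each carries at most
    target_fraction of its rating, plus `spares` extra units for redundancy,
    capped at the number physically installed.
    """
    if rating_w <= 0 or installed < 1 or not 0 < target_fraction <= 1:
        raise ValueError("invalid configuration")
    if load_w < 0:
        raise ValueError("load cannot be negative")
    if load_w > rating_w * installed:
        raise ValueError("load exceeds installed capacity")
    # Smallest count that keeps every active supply at or below the target loading.
    sharing = max(1, math.ceil(load_w / (rating_w * target_fraction)))
    # Add redundancy, but never ask for more supplies than are installed.
    return min(sharing + spares, installed)


# Example: four hypothetical 3 kW supplies, policy targeting 50% load on each.
for load in (500.0, 2400.0, 9000.0):
    print(f"{load:>7.0f} W -> {supplies_to_enable(load, 3000.0, 4)} supplies on")
```

At light load only two units run; at 9 kW the installed count caps the result, so the target fraction can no longer be honored. A real controller would also add hysteresis so supplies do not toggle on small load fluctuations.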

Today’s processors use Adaptive Voltage Scaling (AVS) to adjust their power demand autonomously according to the processing load. At times of low load, both the supply voltage and the operating frequency can be reduced to the minimum needed to perform the required tasks. Version 1.3 of the PMBus™ specification, the open-standard protocol for communication between power devices, adds support for the Adaptive Voltage Scaling Bus (AVSBus™). AVSBus allows processors to adjust the output voltages of their POL converters autonomously, providing another ‘arrow in the quiver’ in addition to the existing PMBus commands.
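The benefit of lowering both voltage and frequency follows from the usual first-order relation for CMOS dynamic power, P ≈ αCV²f, where voltage enters squared. The sketch below picks the lowest entry in a voltage/frequency table that still meets a required clock rate and reports its dynamic power relative to the top entry. The table values are invented for illustration, and the sketch ignores leakage; real AVS loops also trim the voltage of each individual device in closed loop.

```python
from typing import List, Tuple

# Hypothetical operating points: (core voltage in V, clock in MHz), lowest first.
OPERATING_POINTS: List[Tuple[float, float]] = [
    (0.70, 800.0),
    (0.80, 1200.0),
    (0.90, 1600.0),
    (1.00, 2000.0),
]


def pick_operating_point(required_mhz: float,
                         points: List[Tuple[float, float]] = OPERATING_POINTS
                         ) -> Tuple[float, float]:
    """Return the lowest-voltage operating point whose clock meets the demand."""
    for volts, mhz in points:
        if mhz >= required_mhz:
            return volts, mhz
    raise ValueError("demand exceeds the highest operating point")


def relative_dynamic_power(point: Tuple[float, float],
                           reference: Tuple[float, float]) -> float:
    """Ratio of dynamic power P ~ C*V^2*f, assuming equal C and activity factor."""
    (v, f), (v_ref, f_ref) = point, reference
    return (v * v * f) / (v_ref * v_ref * f_ref)


point = pick_operating_point(1000.0)
ratio = relative_dynamic_power(point, OPERATING_POINTS[-1])
print(point, f"{ratio:.3f}")
```

With these made-up numbers, a 1000 MHz demand selects 0.8 V at 1200 MHz, which draws roughly 38% of the dynamic power of the 1.0 V, 2000 MHz point.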

Automatically adjusting the power architecture to ensure optimum operating efficiency under changing conditions not only serves to minimize energy conversion losses in the power supply but also minimizes demand for cooling and hence the energy consumed by active cooling systems such as tray fans and server-room air conditioning. In addition to saving energy, reducing the demand for cooling allows more real estate to be devoted to installing servers, switches or linecards.

At the same time, the software-defined power architecture benefits from increased reliability. Continually adjusting the system to maintain optimum efficiency and minimize heat generation reduces the stress imposed on Intermediate Bus Converters (IBC) and Point-Of-Load (POL) converters. Ultimately, this minimizes equipment downtime due to power-supply failures.

Software-Defined Power in Practice

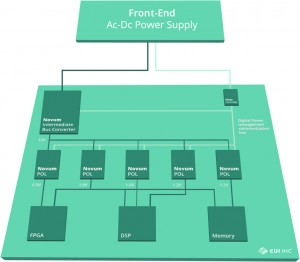

Connectivity is the catalyst that transforms power converters from isolated islands into elements of a coordinated system, able to respond to commands from a central controller at the heart of the software-defined power architecture. The open-standard PMBus is an ideal medium. PMBus-compatible front-end AC-DC power supplies, digital POLs and IBCs are already on the market and are a critical enabler for power-supply vendors to begin building software-defined power architectures for customers.

Figure 2. The open-standard PMBus provides an ideal medium for connecting converters in a software-defined power architecture.
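Much of what travels over PMBus is numeric telemetry and set-points packed into 16-bit words. The sketch below shows the two linear formats defined by the PMBus specification: LINEAR11 (5-bit signed exponent, 11-bit signed mantissa), used for readings such as READ_IOUT (0x8C), and the LINEAR16 output-voltage format, where the exponent comes from the device's VOUT_MODE (0x20) byte and the value is written to VOUT_COMMAND (0x21). It only does the encoding and decoding; actually sending the word would use whatever SMBus/I²C driver the host has. The example VOUT_MODE of 0x17 (exponent −9) is an assumed device setting.

```python
def decode_linear11(raw: int) -> float:
    """Decode a PMBus LINEAR11 word: value = mantissa * 2**exponent."""
    exponent = (raw >> 11) & 0x1F   # upper 5 bits, two's complement
    mantissa = raw & 0x7FF          # lower 11 bits, two's complement
    if exponent > 0x0F:
        exponent -= 0x20
    if mantissa > 0x3FF:
        mantissa -= 0x800
    return mantissa * 2.0 ** exponent


def encode_linear11(value: float) -> int:
    """Encode a value as LINEAR11 using the smallest exponent that fits (best resolution)."""
    for exponent in range(-16, 16):
        mantissa = round(value / 2.0 ** exponent)
        if -1024 <= mantissa <= 1023:
            return ((exponent & 0x1F) << 11) | (mantissa & 0x7FF)
    raise ValueError("value out of LINEAR11 range")


def vout_exponent(vout_mode: int) -> int:
    """Return the signed 5-bit exponent from a VOUT_MODE byte.

    Only a plain linear-mode byte (mode bits 7:5 all zero) is accepted here.
    """
    if (vout_mode >> 5) & 0x07 != 0:
        raise ValueError("VOUT_MODE is not plain linear mode")
    n = vout_mode & 0x1F
    return n - 0x20 if n > 0x0F else n


def encode_vout(volts: float, vout_mode: int) -> int:
    """Convert a voltage to the unsigned 16-bit word for VOUT_COMMAND."""
    raw = round(volts / 2.0 ** vout_exponent(vout_mode))
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("voltage not representable with this exponent")
    return raw


def decode_vout(raw: int, vout_mode: int) -> float:
    """Convert a LINEAR16 word (e.g. from READ_VOUT) back to volts."""
    return raw * 2.0 ** vout_exponent(vout_mode)


print(hex(encode_linear11(12.5)), hex(encode_vout(12.0, 0x17)))
```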

Software-defined power is rapidly gaining adoption in value-added power delivery applications. In these systems the characteristics of digital power are already providing economic benefits, both to design houses and end customers.

However, design teams need time to create models and develop algorithms to take advantage of the ‘power’ of software-defined power delivery. Some may implement software control at the board level first and bring the rack and high-power conversion stages into the software-defined architecture later. Others may begin at the high-power stage and extend software control downstream to the rack and board levels. In addition, early control algorithms may be relatively simple, based on only a small number of parameters, and become more sophisticated over time as larger data sets are analyzed in greater detail.

With increasing familiarity, and a growing repertoire of proven designs that can be reused quickly and efficiently in subsequent projects, tomorrow’s designers will be able to deliver new projects quickly and focus their attention on developing extra value-added features. These could include predictive maintenance, which can help enhance key performance metrics such as uptime, while at the same time bringing the advantages of lower capital costs by reducing equipment replacement rates.

CUI has implemented PMBus connectivity in front-end AC-DC power supplies such as the 3 kW PSE-3000, and in the Novum® families of digital IBCs and non-isolated DC-DC digital POL converters. The ability to communicate with the converters using an industry-standard protocol enables designers to build connected, digitally controllable power architectures.

The IBCs are available in various standard brick sizes and support Dynamic Bus Voltage (DBV), which allows the IBC output voltage to be adjusted to optimize conversion efficiency as load conditions change. The voltage can be set via the PMBus or by power-optimizing firmware hosted on the IBC’s integrated ARM®-based microcontroller. DBV at the IBC level, like AVS at the point of load, is highly effective in maximizing efficiency because it adapts the power envelope to the load conditions, minimizing wasted energy. In addition to setting the IBC voltage, the PMBus interface allows central control of voltage margining, fault management, precision delay ramp-up, and start/stop.
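As a rough illustration of how a DBV policy might behave, the sketch below selects an intermediate bus voltage from load bands and adds hysteresis so the set-point does not chatter when the load hovers near a band edge. The band limits, voltages and 10 W hysteresis are hypothetical values, and the class is an illustration of the general idea rather than the firmware running on any IBC; in a PMBus system the chosen voltage would then be written to the converter's VOUT_COMMAND register.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical load bands: (upper load limit in W, bus voltage in V), ascending.
BANDS: List[Tuple[float, float]] = [
    (100.0, 9.0),
    (250.0, 10.8),
    (float("inf"), 12.0),
]


@dataclass
class DynamicBusVoltage:
    bands: List[Tuple[float, float]]
    hysteresis_w: float = 10.0
    index: int = 0  # currently selected band

    def update(self, load_w: float) -> float:
        """Return the bus voltage to use for the latest load measurement."""
        # Step up immediately once the load exceeds the current band's limit.
        while load_w > self.bands[self.index][0]:
            self.index += 1
        # Step down only when the load is clearly inside the lower band.
        while (self.index > 0
               and load_w < self.bands[self.index - 1][0] - self.hysteresis_w):
            self.index -= 1
        return self.bands[self.index][1]


dbv = DynamicBusVoltage(BANDS)
for load in (50.0, 120.0, 95.0, 80.0, 300.0):
    print(f"{load:>5.0f} W -> {dbv.update(load)} V")
```

Note how a load of 95 W keeps the bus at 10.8 V after coming down from 120 W; only when the load falls below 90 W does the controller return to 9.0 V.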

The Novum POL converters allow PMBus control of many of the same functions as the IBC modules, and additionally support software control of voltage sequencing and tracking.
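Sequencing means each rail turns on only after the rails it depends on are up. A minimal way to derive per-rail turn-on delays is to order the rails topologically by dependency and start each one after its slowest predecessor has finished ramping, plus a safety gap. In a PMBus system such values could be written to each converter's TON_DELAY (0x60) register. The rail names, ramp times and 1 ms gap below are hypothetical, tracking is not modeled, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter
from typing import Dict, Set


def turn_on_delays(depends_on: Dict[str, Set[str]],
                   rise_ms: Dict[str, float],
                   gap_ms: float = 1.0) -> Dict[str, float]:
    """Compute a turn-on delay (ms) for each rail.

    A rail starts only after every rail it depends on has finished its ramp,
    plus gap_ms. Raises graphlib.CycleError if the dependencies form a loop.
    """
    delays: Dict[str, float] = {}
    # static_order() yields each rail after all of its predecessors.
    for rail in TopologicalSorter(depends_on).static_order():
        parents = depends_on.get(rail, set())
        delays[rail] = max((delays[p] + rise_ms[p] + gap_ms for p in parents),
                           default=0.0)
    return delays


# Hypothetical board: auxiliary 3.3 V first, then I/O, then core and memory rails.
deps = {"1V8_IO": {"3V3_AUX"}, "0V9_CORE": {"1V8_IO"}, "1V2_DDR": {"1V8_IO"}}
rise = {"3V3_AUX": 2.0, "1V8_IO": 1.0, "0V9_CORE": 1.5, "1V2_DDR": 1.0}
print(turn_on_delays(deps, rise))
```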

To give an idea of the energy savings possible by automatically adapting the power architecture, consider a front-end AC-DC power supply with an average efficiency of 95%, feeding an IBC operating at 93%, which in turn feeds a POL converter operating at 88%. Because the stages are cascaded, overall efficiency is the product of the three, about 77.7%, so roughly 22.3% of the input power is dissipated as heat. If the efficiency of each stage could be raised by just one percentage point, the loss would fall to about 19.7%. This cuts the energy wasted in power conversion by roughly 11.5%, which is a significant saving. Moreover, the reduced cooling burden brings additional energy savings.
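Since the three converters are cascaded, the fraction of input power lost as heat is one minus the product of the stage efficiencies. The short calculation below reproduces the example using the stated efficiencies; it covers conversion losses only and leaves out cooling and distribution losses.

```python
from math import prod  # Python 3.8+


def chain_loss(efficiencies):
    """Fraction of input power lost as heat across cascaded converters."""
    return 1.0 - prod(efficiencies)


baseline = chain_loss([0.95, 0.93, 0.88])  # front end, IBC, POL: about 0.2225
improved = chain_loss([0.96, 0.94, 0.89])  # each stage one point better: about 0.1969
reduction = (baseline - improved) / baseline  # relative cut in losses: about 0.115

print(f"{baseline:.1%} -> {improved:.1%} ({reduction:.1%} less heat)")
```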

The Future

As software-defined power architectures become the norm in data centers and telecom offices, the industry will need to rely on interoperability between power modules from different vendors. Although the PMBus standardizes inter-module connectivity to some extent, some commands are open to interpretation and may produce different results when used with converters from different manufacturers. To overcome this issue, the AMP Group™ (Architects of Modern Power), comprising CUI, Ericsson Power Modules and Murata, has collaborated to standardize software aspects, including responses to PMBus commands, as well as mechanical details such as form factors and pin configurations.

Figure 3. The AMP Group was founded to collaborate and develop multi-source solutions specifically for software-defined power applications.

Conclusion

The software-defined power architecture offers the efficiency improvements and cost savings that infrastructure owners need, both during the design phase and in ongoing operating costs. Further advantages include increased reliability and the opportunity to implement predictive maintenance, resulting in greater uptime. The transition from analog-based to software-defined power architectures is occurring rapidly. As power-supply design teams gain familiarity with this technology, they will be able to deliver increasingly sophisticated, efficient and reliable systems quickly and cost-effectively.